Linear Regression의 원리에 대해 가볍게 알아봤으니, tensorflow를 통해 간단한 Linear Regression을 구현해보고자 한다.

지난 번에 사용했던

값을 사용하고자 한다.

import tensorflow as tf

x_train = [1,2,3] #학습할 x값

y_train = [1,2,3] #학습할 y값

#Tensorflow 가 사용하는 Variable : Tensorflow가 자체적으로 변화시키는 변수 _ 학습하는 과정에서 변경시킴

W = tf.Variable(tf.random.normal([1]), name = 'weight') # Weight

#값이 하나인 array를 주게 됨

b = tf.Variable(tf.random.normal([1]), name = 'bias') #Bias우선 x값과 y 값이 있는 train data를 입력해주고, 가중치(Weight)가 될 W와 편향치(Bias)가 될 b값을 랜덤으로 설정해준다. (어차피 W,b 값은 학습을 반복하며 최적화된 숫자로 적합해갈 것이다.)

그 후 ,

# Only in Tensorflow 1.0

hypothesis = x_train * W + b

cost = tf.reduce_mean(tf.square(hypothesis - y_train))

optimizer = tf.train.GradientDescentOptimizer(learning_rate = 0.01)

train = optimizer.minimize(cost)

sess = tf.Session()

sess.run(tf.global_variables_initializer())

for step in range(2001):

sess.run(train)

if step % 20 == 0:

print(step, sess.run(cost), sess.run(W), sess.rub(b))이 코드를 바로 실행하게 되면 많은 오류가 생긴다.

GradientDescentOptimizer, minimze, Session 등의 함수 구성이 Tensorflow 1.0 버전의 방식이기 때문이다.

구글링을 하면, 다른 방식 (Keras) 를 사용해서 구하는 방식들은 있었지만, cost 함수를 구성하고 반복문을 통해 W와 b를 직접 업데이트 하는 방식들은 많지 않았다.

다행히도 function을 구성하여 W와 b 값을 갱신하는 코드가 있어서 참고하여 코드를 작성해보았다.

#Tensorflow 2.0

## Hypothesis 설정

@tf.function

def hypothesis(x):

return W*x + b

## Cost Function 계산

@tf.function

def cost(pred, y_train):

return tf.reduce_mean(tf.square(pred - y_train))

## 최적화

optimizer = tf.optimizers.SGD(learning_rate = 0.01)

## 최적화 갱신 과정

@tf.function

def optimization():

with tf.GradientTape() as g:

pred = hypothesis(x_train)

loss = cost(pred, y_train)

gradients = g.gradient(loss, [W,b])

optimizer.apply_gradients(zip(gradients, [W,b]))

## 최적화 반복

for step in range(1, 2001):

optimization()

#20 회마다 갱신 값 프린트

if step % 20 == 0:

pred = hypothesis(x_train)

loss = cost(pred, y_train)

tf.print(step,loss, W, b)

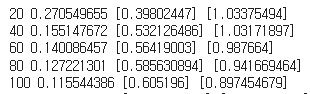

회차를 반복할 수록, Cost의 값이 매우 작은 값으로 수렴하였고, W 값은 1로, b값은 0으로 수렴하는 것을 확인할 수 있었다.

즉, H(x) = 1 x + 0 이라는 식에 가까워지는 것을 확인할 수 있었다.

'DataScience > Machine Learning Basic' 카테고리의 다른 글

| Multivariable linear regression (0) | 2022.02.21 |

|---|---|

| Linear Regression Cost Function & Gradient Descent Algorithm (0) | 2022.02.20 |

| Linear Regression (0) | 2022.01.25 |

| Tensorflow 기본 Operation (0) | 2022.01.21 |

| Tensorflow in Pycharm 그리고 Google Colab (0) | 2022.01.21 |